Motivation

Human pose tracking and full-body pose estimation and reconstruction in outdoor, unstructured environments are highly relevant and challenging problems. Their wide range of applications includes search and rescue, managing large public gatherings, and coordinating outdoor sports events. In indoor settings, similar applications usually make use of body-mounted sensors, artificial markers and static cameras. While such markers might still be usable in outdoor scenarios, dynamic ambient lighting conditions and the impossibility of having environment-fixed cameras make the overall problem difficult. Body-mounted sensors, on the other hand, are not feasible in several situations (e.g., large crowds of people). Therefore, our approach involves a team of micro aerial vehicles (MAVs) that track subjects using only on-board monocular cameras and computational units, without any subject-fixed sensor or marker.

Methodology

Control

Autonomous MoCap systems rely on robots with on-board cameras that can localize and navigate autonomously. More importantly, these robots must detect, track and follow the subject (human or animal) in real time. Thus, a key component of such a system is motion planning and control of multiple robots that ensures optimal perception of the subject while obeying other constraints, e.g., inter-robot and static obstacle collision avoidance.

Our approach to this formation control problem is based on model predictive control (MPC). An important challenge is handling collision avoidance, because the constraint itself is non-convex and leads to local minima that are not easily identifiable. One possible approach is to treat collision avoidance as a separate planning module that modifies the MPC-generated trajectory using potential fields. However, this leads to sub-optimal trajectories and field local minima. In our work [5], we provide a holistic solution to this problem. Instead of directly using repulsive potential field functions to avoid obstacles, we replace them with their exact values at every iteration of the MPC and treat these values as external input forces in the system dynamics. Thus, the problem remains convex at every time step. As long as a feasible solution exists for the optimization, obstacle avoidance is guaranteed. Even though field local minima issues remain, they become easier to identify and resolve. To this end, we propose and validate multiple strategies.
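To illustrate why evaluating the repulsive field numerically keeps the optimization convex, the following is a minimal sketch under strong simplifying assumptions. It is not the formulation of [5]: it uses a 2D single-integrator model, a one-step horizon, a textbook repulsive potential, and no constraints, so it carries none of the guarantees discussed above. All function names, gains and radii are hypothetical.

```python
import math

def repulsive_force(pos, obstacle, influence_radius=3.0, gain=2.0):
    """Textbook repulsive potential-field force, zero outside the influence radius."""
    dx, dy = pos[0] - obstacle[0], pos[1] - obstacle[1]
    dist = math.hypot(dx, dy)
    if dist >= influence_radius or dist == 0.0:
        return (0.0, 0.0)
    # Negative gradient of 0.5 * gain * (1/dist - 1/influence_radius)^2,
    # which points away from the obstacle.
    magnitude = gain * (1.0 / dist - 1.0 / influence_radius) / dist ** 2
    return (magnitude * dx / dist, magnitude * dy / dist)

def step_cost(u, pos, goal, f_ext, dt=0.1, r=0.01):
    """Squared distance to the goal after one step plus a control-effort penalty."""
    nx = pos[0] + dt * (u[0] + f_ext[0])
    ny = pos[1] + dt * (u[1] + f_ext[1])
    return (nx - goal[0]) ** 2 + (ny - goal[1]) ** 2 + r * (u[0] ** 2 + u[1] ** 2)

def solve_one_step(pos, goal, f_ext, dt=0.1, r=0.01):
    """Closed-form minimiser of step_cost.

    f_ext is a fixed number here, not a function of the decision variable u,
    so the cost is a convex quadratic in u.
    """
    scale = dt / (dt * dt + r)
    return tuple(scale * (goal[i] - pos[i] - dt * f_ext[i]) for i in range(2))

# Usage: evaluate the field once at the current position, then optimise.
position, goal, obstacle = (1.0, 0.5), (5.0, 0.0), (2.0, 0.0)
force = repulsive_force(position, obstacle)
control = solve_one_step(position, goal, force)
```

In a receding-horizon loop, the field would be re-evaluated at each iteration, so the non-convex obstacle term only ever enters the optimization as a known input.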

We further address the complete problem of perception-driven formation control of multiple aerial robots tracking a human [3]. To this end, we developed a decentralized convex MPC that generates collision-free formation motion plans while minimizing the jointly estimated uncertainty in the tracked person's position. This estimation uses a cooperative approach [1] similar to the one developed in earlier work from our group [6]. We validated the real-time efficacy of the proposed algorithm through several field experiments with 3 self-designed octocopters and through simulation experiments in a realistic outdoor environment with up to 16 robots.
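As a rough illustration of what "jointly estimated uncertainty" can mean, the sketch below fuses independent Gaussian position estimates from several robots by inverse-variance weighting. This is a generic textbook fusion rule, not the cooperative estimator of [1] or [6]; the diagonal-covariance assumption, the trace-based cost and all names are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse independent Gaussian estimates of a 3D position.

    estimates: list of (mean, variance) pairs, each a 3-tuple (x, y, z).
    Covariances are assumed diagonal, so each axis is fused separately.
    """
    fused_mean, fused_var = [], []
    for axis in range(3):
        information = sum(1.0 / var[axis] for _, var in estimates)
        weighted = sum(mean[axis] / var[axis] for mean, var in estimates)
        fused_var.append(1.0 / information)
        fused_mean.append(weighted / information)
    return tuple(fused_mean), tuple(fused_var)

def uncertainty_cost(variance):
    """Trace of the diagonal covariance, used here as a scalar uncertainty measure."""
    return sum(variance)

# Usage: two robots observe the same person with equal confidence.
robot_a = ((0.0, 0.0, 0.0), (1.0, 1.0, 4.0))
robot_b = ((2.0, 2.0, 2.0), (1.0, 1.0, 4.0))
mean, variance = fuse_estimates([robot_a, robot_b])
```

The fused variance is never larger than that of any single estimate, which is why a formation that gives each robot an informative view reduces a scalar cost of this kind.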

Perception

The perception functionality of AirCap is split into two phases, namely, i) online data acquisition, and ii) offline pose and shape estimation.

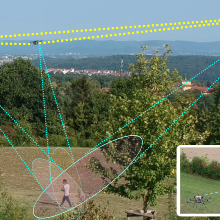

During the online data acquisition phase, the MAVs detect and track the 3D position of a subject while following them. To this end, they perform online and on-board detection using a deep neural network (DNN)-based detector. DNNs often fail at detecting small-scale objects or those that are far away from the camera, both of which are typical in scenarios with aerial robots. In our solution [1], the mutual world knowledge about the tracked person is jointly acquired by our multi-MAV system during cooperative person tracking. Leveraging this, our method actively selects the relevant region of interest (ROI) in the images from each MAV that supplies the highest information content. Our method not only reduces the information loss incurred by down-sampling the high-resolution images, but also increases the chance of the tracked person being completely in the field of view (FOV) of all MAVs. The data acquired in the online data acquisition phase consists of images captured by all MAVs (see, for example, the left image above) and their estimated camera extrinsic and intrinsic parameters.
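One way to picture ROI selection is to project the predicted person position into the image and size a crop from the assumed person height plus the position uncertainty. The sketch below does this with a pinhole camera model. It is an illustration only, not the selection rule of [1]; the square ROI, the default person height, the sigma margin and all names are assumptions.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D point given in the camera frame (z forward)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (fx * x / z + cx, fy * y / z + cy)

def select_roi(person_cam, position_std, intrinsics, image_size,
               person_height=1.8, n_sigma=3.0):
    """Return (left, top, right, bottom) of a square ROI around the person.

    The ROI covers the assumed person height plus n_sigma standard deviations
    of position uncertainty on each side, and is clamped to the image bounds.
    """
    fx, fy, cx, cy = intrinsics
    u, v = project_point(person_cam, fx, fy, cx, cy)
    extent_m = person_height + 2.0 * n_sigma * position_std
    half = 0.5 * fy * extent_m / person_cam[2]  # half side length in pixels
    width, height = image_size
    left = max(0, round(u - half))
    top = max(0, round(v - half))
    right = min(width, round(u + half))
    bottom = min(height, round(v + half))
    return left, top, right, bottom

# Usage: person predicted 10 m in front of a 1920x1080 camera.
roi = select_roi((0.0, 0.0, 10.0), 0.5, (1000, 1000, 960, 540), (1920, 1080),
                 person_height=2.0, n_sigma=2.0)
```

Cropping such an ROI before resizing to the detector's input resolution preserves more pixels on the person than down-sampling the full frame, and a larger uncertainty widens the crop so the person is less likely to fall outside it.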

In the second phase, which is offline, human pose and shape as a function of time are estimated using only the acquired RGB images and the MAVs' self-localization (the camera extrinsics). State-of-the-art methods like VNect and HMR yield only a noisy 3D estimate of the human pose. Our approach [2] is to exploit multiple noisy 2D body joint detectors and noisy camera pose information. We then optimize for body shape, body pose, and camera extrinsics by fitting the SMPL body model to the 2D observations. This approach uses a strong body model to take low-level uncertainty into account and results in the first fully autonomous flying mocap system.
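Fitting a body model to 2D observations from several cameras typically involves a reprojection error term. The sketch below shows only such a data term, weighted by detector confidence. It is a simplified illustration and not the objective of [2]: it takes 3D joints directly instead of SMPL pose and shape parameters, omits priors and the optimizer, and all names and the camera dictionary layout are hypothetical.

```python
def reprojection_error(joints_3d, cameras, detections):
    """Confidence-weighted squared 2D reprojection error over all cameras.

    joints_3d:  list of (x, y, z) joints in the world frame
    cameras:    list of dicts with 'R' (3x3 as rows), 't' (3 values),
                'fx', 'fy', 'cx', 'cy'
    detections: per camera, a list of (u, v, confidence), one per joint
    """
    total = 0.0
    for cam, dets in zip(cameras, detections):
        for joint, (u_obs, v_obs, conf) in zip(joints_3d, dets):
            # World frame to camera frame: X_cam = R * X_world + t
            xc = [sum(cam["R"][i][j] * joint[j] for j in range(3)) + cam["t"][i]
                  for i in range(3)]
            # Pinhole projection into pixel coordinates
            u = cam["fx"] * xc[0] / xc[2] + cam["cx"]
            v = cam["fy"] * xc[1] / xc[2] + cam["cy"]
            total += conf * ((u - u_obs) ** 2 + (v - v_obs) ** 2)
    return total

# Usage: one camera at the world origin looking along +z.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
camera = {"R": identity, "t": [0.0, 0.0, 0.0],
          "fx": 500.0, "fy": 500.0, "cx": 320.0, "cy": 240.0}
joints = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0)]
perfect = [[(320.0, 240.0, 1.0), (420.0, 240.0, 1.0)]]
shifted = [[(320.0, 240.0, 1.0), (423.0, 240.0, 0.5)]]
```

In a full pipeline, the joints would be a function of body pose and shape, and the camera rotation and translation would be optimized jointly with them, so errors in the MAVs' self-localization can be corrected by the same objective.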

Goals and Objectives

AirCap's goal is to achieve markerless, unconstrained, human motion capture (mocap) in unknown and unstructured outdoor environments. To that end, our objectives are

- to develop an autonomous flying motion capture system using a team of micro aerial vehicles (MAVs) with only on-board, monocular RGB cameras [1] [3] [4].

- to use the images captured by these robots for human body pose and shape estimation with sufficiently high accuracy [2].

Publications

[1] Deep Neural Network-based Cooperative Visual Tracking through Multiple Micro Aerial Vehicles, Price, E., Lawless, G., Ludwig, R., Martinovic, I., Buelthoff, H. H., Black, M. J., Ahmad, A., IEEE Robotics and Automation Letters, 3(4):3193-3200, IEEE, October 2018. Also accepted and presented at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

[2] Markerless Outdoor Human Motion Capture Using Multiple Autonomous Micro Aerial Vehicles, Saini, N., Price, E., Tallamraju, R., Enficiaud, R., Ludwig, R., Martinović, I., Ahmad, A., Black, M., Proceedings 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pages: 823-832, IEEE, October 2019

[3] Active Perception based Formation Control for Multiple Aerial Vehicles, Tallamraju, R., Price, E., Ludwig, R., Karlapalem, K., Bülthoff, H. H., Black, M. J., Ahmad, A., IEEE Robotics and Automation Letters, 4(4):4491-4498, IEEE, October 2019

[4] AirCap – Aerial Outdoor Motion Capture, Ahmad, A., Price, E., Tallamraju, R., Saini, N., Lawless, G., Ludwig, R., Martinovic, I., Bülthoff, H. H., Black, M. J., IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2019), Workshop on Aerial Swarms, November 2019

[5] Decentralized MPC based Obstacle Avoidance for Multi-Robot Target Tracking Scenarios, Tallamraju, R., Rajappa, S., Black, M. J., Karlapalem, K., Ahmad, A., 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), pages: 1-8, IEEE, August 2018

[6] An Online Scalable Approach to Unified Multirobot Cooperative Localization and Object Tracking, Ahmad, A., Lawless, G., Lima, P., IEEE Transactions on Robotics (T-RO), 33, pages: 1184 - 1199, October 2017

Aamir Ahmad

Jun.-Prof. Dr.-Ing., Deputy Director of the Institute

Nitin Saini

M. Sc.